📎 Integrating Reasoning and Vector RAG

Limitations of Reasoning-Based RAG

- Retrieval Speed: Reasoning-based retrieval can be slower than vector search because it relies on LLM reasoning to select nodes.

- Summary-Based Selection: Selecting nodes by their summaries alone may miss important details that appear only in the original content.

Vector-Based Node Retrieval

To address these limitations, we can leverage vector-based retrieval at the node level:

- Chunking: Each node is divided into several smaller chunks.

- Vector Search: The query is used to search for the top-K most relevant chunks.

- Node Scoring: For each retrieved chunk, we identify its parent node. The relevance score for a node is calculated by aggregating the similarity scores of its associated chunks.
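The chunking and vector-search steps above can be sketched as follows. This is a minimal illustration under stated assumptions:

- `embed` is a toy bag-of-words stand-in for a real embedding model.
- Chunks are fixed-size word windows.
- `chunk_node`, `vector_search`, `chunk_size` and `top_k` are hypothetical names, not part of any official API.

```python
import math
import re
from collections import Counter

def chunk_node(node_id, text, chunk_size=8):
    """Split a node's text into word windows of at most `chunk_size` words, tagged with the parent node id."""
    words = text.split()
    return [
        (node_id, " ".join(words[i:i + chunk_size]))
        for i in range(0, len(words), chunk_size)
    ]

def embed(text):
    """Hypothetical stand-in for a real embedding model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def vector_search(query, nodes, top_k=3, chunk_size=8):
    """Chunk every node, score each chunk against the query, return the top-K (node_id, chunk, score)."""
    chunks = [c for node_id, text in nodes.items() for c in chunk_node(node_id, text, chunk_size)]
    q = embed(query)
    scored = [(node_id, chunk, cosine(q, embed(chunk))) for node_id, chunk in chunks]
    scored.sort(key=lambda item: item[2], reverse=True)
    return scored[:top_k]

# Hypothetical toy nodes: node_id -> node text.
nodes = {
    "1.1": "Revenue grew 12 percent driven by cloud services and subscriptions.",
    "2.3": "Operating expenses rose because of higher research and development spending.",
}
for node_id, chunk, score in vector_search("cloud revenue growth", nodes, top_k=2):
    print(node_id, round(score, 3), chunk)
```

Each result keeps its parent node id. The node-scoring step needs only that id and the similarity score.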

Example Node Scoring Rule

Let N be the number of content chunks associated with a node, and let ChunkScore(n) be the relevance score of chunk n. The Node Score is computed as:

$$
\text{NodeScore} = \frac{1}{\sqrt{N+1}} \sum_{n=1}^{N} \text{ChunkScore}(n)
$$

- The sum aggregates relevance from all related chunks.

- The +1 inside the square root keeps the denominator nonzero, so the formula stays well defined for nodes with zero chunks (N = 0).

- Dividing by N (i.e., taking the mean) would normalize the score but ignore how many chunks are relevant, treating nodes with many and few relevant chunks equally.

- Using the square root in the denominator allows the score to increase with the number of relevant chunks, but with diminishing returns. This rewards nodes with more relevant chunks, while preventing large nodes from dominating due to quantity alone.

- In practice, this scoring tends to favor nodes with a few highly relevant chunks over nodes with many weakly relevant ones.
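Below is a minimal sketch of the scoring rule above. It assumes N counts the chunks of a node that were returned by the vector search. A node with no retrieved chunks would score 0 / √1 = 0, so it is simply omitted. `node_scores` and `rank_nodes` are hypothetical names. The example compares three nodes:

- A has two chunks scoring 0.9.
- B has ten chunks scoring 0.3.
- C has a single chunk scoring 0.95.

```python
import math
from collections import defaultdict

def node_scores(retrieved_chunks):
    """Aggregate (node_id, chunk_score) pairs into NodeScore = sum(ChunkScore) / sqrt(N + 1).

    Assumption: N is the number of retrieved chunks that belong to the node.
    """
    per_node = defaultdict(list)
    for node_id, score in retrieved_chunks:
        per_node[node_id].append(score)
    return {
        node_id: sum(scores) / math.sqrt(len(scores) + 1)
        for node_id, scores in per_node.items()
    }

def rank_nodes(retrieved_chunks):
    """Return node ids (not the chunks themselves) ordered by descending node score."""
    scores = node_scores(retrieved_chunks)
    return sorted(scores, key=scores.get, reverse=True)

chunks = [("A", 0.9), ("A", 0.9)] + [("B", 0.3)] * 10 + [("C", 0.95)]
print(rank_nodes(chunks))  # → ['A', 'B', 'C']
```

The three aggregation choices rank these nodes differently:

- The plain sum ranks B first (3.0 vs 1.8 vs 0.95).
- The mean ranks C first (0.95 vs 0.9 vs 0.3).
- Dividing by √(N+1) ranks A (≈1.04) ahead of B (≈0.90) and C (≈0.67).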

Note: In this framework, we retrieve nodes based on their associated chunks, but do not return the chunks themselves.

Integration with Reasoning-Based Retrieval

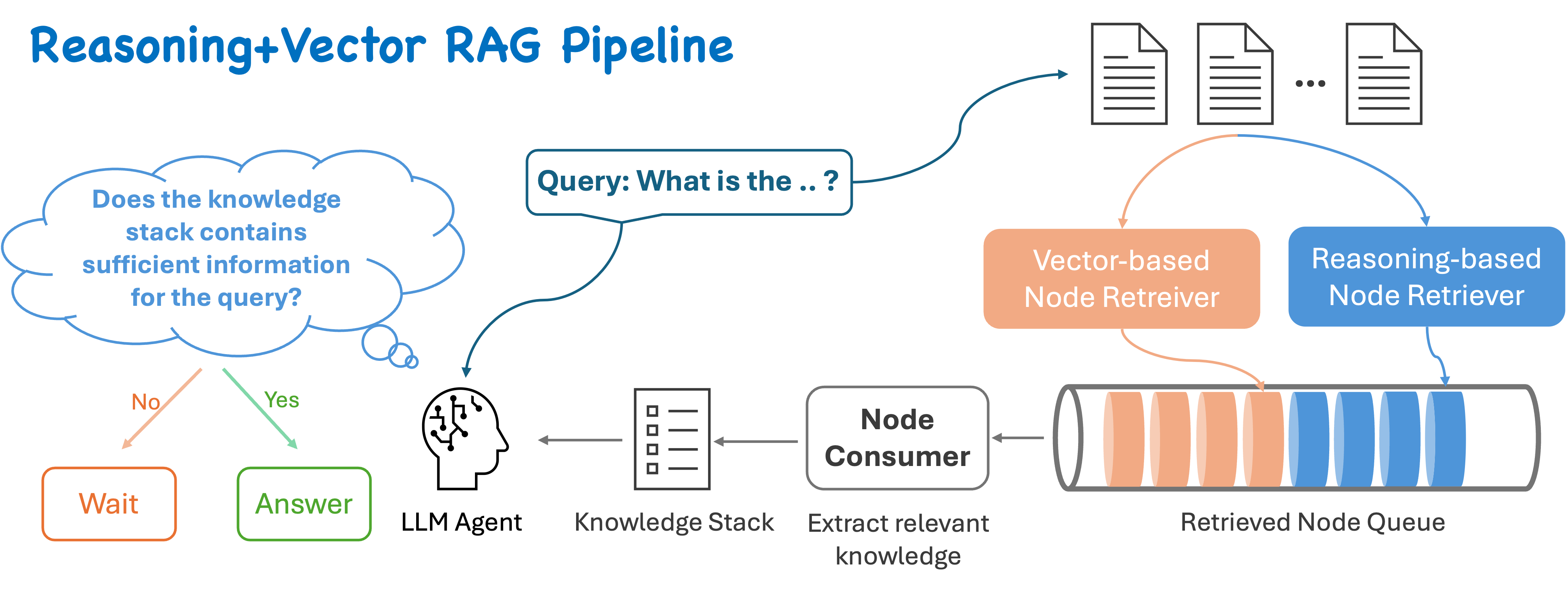

To leverage the strengths of both approaches:

- Parallel Retrieval: Perform vector-based node search and reasoning-based node search at the same time.

- Queue System: Maintain a queue of unique nodes. As nodes are returned from either search, add each one only if it has not already been queued.

- Node Consumer: A consumer processes nodes from the queue, extracting or summarizing relevant information from each node.

- LLM Agent: The agent continually evaluates whether enough information has been gathered. If so, the process can terminate early.
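The parallel searches, deduplicating queue, node consumer and early-termination check can be sketched with Python's `asyncio`. Everything search-specific here is hypothetical:

- `vector_node_search` and `reasoning_node_search` emit hard-coded node ids with simulated delays.
- `extract` stands in for LLM-based extraction.
- `enough_information` replaces the LLM agent's judgment with a simple count threshold (`needed`).

```python
import asyncio

# Hypothetical searches: real ones would query a vector index and run an
# LLM-guided tree search. Here they emit fixed node ids with simulated latency.
async def vector_node_search(query):
    for node_id in ["2.1", "3.4", "1.2"]:
        await asyncio.sleep(0.01)  # vector search: fast
        yield node_id

async def reasoning_node_search(query):
    for node_id in ["3.4", "5.1"]:
        await asyncio.sleep(0.03)  # reasoning search: slower (LLM calls)
        yield node_id

async def produce(search, query, queue, seen):
    """Feed one search's nodes into the shared queue, skipping duplicates."""
    async for node_id in search(query):
        # No await between the check and the add, so this is race-free on one event loop.
        if node_id not in seen:
            seen.add(node_id)
            await queue.put(node_id)

def extract(node_id):
    # Hypothetical: an LLM would extract or summarize the node's relevant content.
    return f"notes from node {node_id}"

def enough_information(notes, needed):
    # Hypothetical stand-in for the LLM agent's sufficiency judgment.
    return len(notes) >= needed

async def hybrid_retrieve(query, needed=3):
    queue, seen, notes = asyncio.Queue(), set(), []
    producers = [
        asyncio.create_task(produce(search, query, queue, seen))
        for search in (vector_node_search, reasoning_node_search)
    ]

    async def close_when_done():
        # Once both searches finish (or are cancelled), wake the consumer with a sentinel.
        await asyncio.gather(*producers, return_exceptions=True)
        await queue.put(None)

    closer = asyncio.create_task(close_when_done())

    # Node consumer: process nodes in arrival order until the agent is satisfied.
    while (node_id := await queue.get()) is not None:
        notes.append(extract(node_id))
        if enough_information(notes, needed):
            break  # early termination

    for task in producers:
        task.cancel()  # no-op for searches that already finished
    await closer
    return notes

if __name__ == "__main__":
    print(asyncio.run(hybrid_retrieve("What drove revenue growth?")))
```

The `seen` set records every node ever queued, instead of checking what is currently in the queue. This keeps a node from being processed twice even after it has been dequeued. When the agent is satisfied, the remaining searches are cancelled. Otherwise a `None` sentinel ends the loop once both searches finish.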

Benefits:

- Combines the speed of vector-based retrieval with the depth of reasoning-based methods.

- Achieves higher recall than either approach alone by leveraging their complementary strengths.

- Delivers relevant results quickly, without sacrificing accuracy or completeness.

- Scales efficiently for large document collections and complex queries.

🚀 The Retrieve API is coming soon!

📢 Stay Tuned!

We are continuously updating our documentation with new examples and best practices.

Last updated: 2025/05/01